RNN – How Recurrent Neural Networks Work

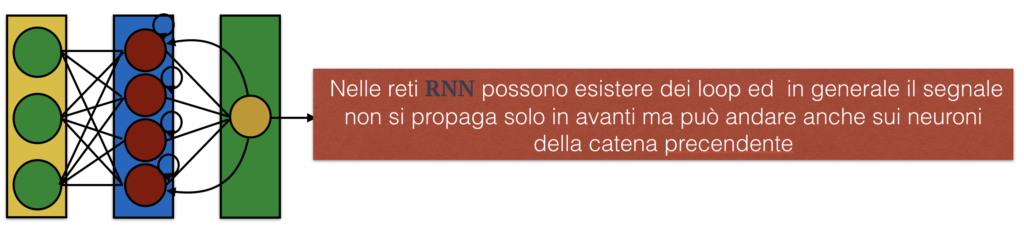

RNNs, or Recurrent Neural Networks, are interesting because they differ from feed-forward networks, where the signal can only travel in one direction and each neuron can only be connected to one or more neurons in the next layer. In an RNN, neurons can also have loops and/or be connected to neurons in a previous layer.

Recurrent networks essentially allow backward or same-level connections. This feature matters because recurrence intrinsically introduces memory into the network. In an RNN, the output of a neuron can influence itself at a subsequent time step, or it can influence neurons in a previous layer, which in turn affect the behavior of the neuron that closes the loop. There is not just one way to implement an RNN; over time, various types have been proposed and studied. Among the most famous are those based on LSTM (Long Short-Term Memory) and GRU (Gated Recurrent Unit) cells, which we will discuss later.

When can or should we use RNNs?

Let’s take an example to understand the context. If I were analyzing a photo to find objects of interest, I would provide the neural network with information that is static over time, i.e., the photo’s pixels. This scenario is easily handled by a feed-forward network, for instance a properly trained CNN, whose input consists of as many values as there are pixels, multiplied by 3 for RGB color images.

But what should I do if, instead of a static image, I had a video? If I only wanted to recognize objects again, nothing would change: I could keep using a feed-forward network on each frame. However, if I wanted to observe a behavior, things would be completely different.

Recognizing a behavior implies analyzing an action over time, and only the information collected across several frames can tell us what type of behavior is being exhibited. Recognizing a person in a video is one thing, but understanding whether, for example, the person is drinking from a bottle or blowing into it is quite different.

To better understand the importance of the time sequence, consider sign language. Recognizing only the hand is not enough; understanding the sequence of movements is essential to interpret the gesture’s meaning.

Our goal will therefore be to understand how to process information that changes over time, and to learn how to build and train a network with memory (an RNN) so that it can observe changes and recognize different actions. In its simplest form, an RNN can be schematized as an RNN cell. Let’s see what that means.

Before explaining the RNN cell, we must understand that RNNs operate over time. Unlike classic feed-forward networks, where the provided data is static, the type of data an RNN handles is a time sequence, or time series. Let’s look at some examples.

A time sequence or series can be considered a function sampled at multiple time instants. It could be a sampled voice waveform, the price trend of a stock, or a sentence.

For a sequence consisting of a sentence, the samples will be individual words appropriately coded. In this case, the RNN cell will receive, over time, the individual words, which will also be processed based on the previous word or the previous words, depending on the type of RNN cell being used.
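As a minimal sketch of what encoding the words can look like, the snippet below maps each word of a sentence to an integer index and then to a one-hot vector, producing one input vector per time step. The helper names (`build_vocab`, `one_hot`) and the example sentence are illustrative assumptions, not something the article prescribes; other encodings, such as learned embeddings, are equally possible.

```python
def build_vocab(words):
    """Assign each distinct word an integer index, in order of first appearance."""
    vocab = {}
    for word in words:
        if word not in vocab:
            vocab[word] = len(vocab)
    return vocab


def one_hot(index, size):
    """Return a list of `size` zeros with a single 1.0 at position `index`."""
    vector = [0.0] * size
    vector[index] = 1.0
    return vector


# Hypothetical example sentence, split into words (the "samples" of the sequence).
sentence = "the cat sat on the mat".split()
vocab = build_vocab(sentence)

# One vector per time step: this is the sequence X(0), X(1), ... fed to the cell.
sequence = [one_hot(vocab[word], len(vocab)) for word in sentence]
```

Note that the two occurrences of "the" get the same vector; it is the recurrent state, not the encoding, that lets the network treat them differently depending on what came before.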

In summary, at each time t the RNN cell receives both the input X(t) and its own output from the previous step, S(t-1). Feeding the output back in allows the network to base its decisions on its past history. With this approach, it is essential to fix the maximum number of time steps to process; otherwise, the feedback loop would have no defined end.

Now that we have seen that an RNN can be represented by a cell with a feedback loop that runs for a finite number of steps, let’s try to understand how it can be trained.

We introduce the concept of unfolding the network, which essentially means transforming an RNN into a feed-forward network. If we look at the following figure, we can easily see that the RNN has become a feed-forward neural network.

Let’s try to formalize the concept mentioned above. An RNN cell is the part of a recurrent network that preserves an internal state h(t) for each time instant. It consists of a fixed number of neurons and can be considered a kind of network layer. In our network, the output at each instant will be $h(t) = f(X(t), h(t-1))$, where $h(t)$ depends on the input $X(t)$ and the previous state $h(t-1)$.

In conclusion, unfolding the network means fixing in advance the number of time steps over which to perform the analysis. An RNN unfolded over 10 steps is therefore equivalent to a feed-forward DNN with 10 layers that all share the same weights.
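The equivalence between the loop and its unfolded form can be checked with a small sketch. It assumes a scalar cell of the form `tanh(u*x + w*h_prev + b)` with hand-picked weights; the function names and values are hypothetical and only serve to show that writing out the steps one by one, as stacked layers, gives the same result as the loop.

```python
import math


def cell(h_prev, x, w=0.5, u=1.0, b=0.0):
    """One scalar recurrent step: new state from previous state and current input."""
    return math.tanh(u * x + w * h_prev + b)


def run_recurrent(xs, h0=0.0):
    """Apply the same cell once per time step, feeding the state back in."""
    h = h0
    for x in xs:
        h = cell(h, x)
    return h


def run_unfolded_3(xs, h0=0.0):
    """The same computation written out as three stacked 'layers'."""
    h1 = cell(h0, xs[0])  # layer 1
    h2 = cell(h1, xs[1])  # layer 2
    h3 = cell(h2, xs[2])  # layer 3
    return h3


xs = [0.2, -0.4, 0.9]
recurrent_result = run_recurrent(xs)
unfolded_result = run_unfolded_3(xs)
```

Every "layer" of the unfolded version calls the same `cell` with the same weights, which is the main difference from an ordinary 3-layer feed-forward network, where each layer would have its own parameters.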

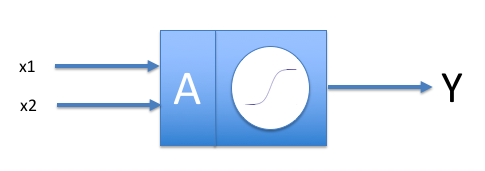

If we briefly review the functioning of a neuron with two inputs, we’ll remember that the output function, as explained in the article dedicated to feed-forward networks, is something like $y = \mathrm{sigmoid}(z)$, while $z = w_1 x_1 + w_2 x_2 + b$, where the sigmoid in this case is the activation function, which we’ll generally call $\phi$, and $b = 0$.
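A two-input neuron of this kind can be sketched in a few lines. The function names and the weight values below are assumptions chosen for illustration; only the structure (weighted sum, then sigmoid) comes from the text.

```python
import math


def sigmoid(z):
    """Logistic sigmoid: squashes any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(x1, x2, w1, w2, b=0.0):
    """Weighted sum of two inputs plus bias, passed through the sigmoid."""
    return sigmoid(w1 * x1 + w2 * x2 + b)


y_zero = neuron(0.0, 0.0, w1=0.5, w2=-0.3)  # weighted sum is 0, so output is 0.5
y_pos = neuron(1.0, 1.0, w1=2.0, w2=1.0)    # weighted sum is 3, output close to 1
```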

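Applying the same reasoning to the RNN we get $h(t) = \phi\big(W\,X(t) + U\,h(t-1) + b\big)$, where $W$ and $U$ are the weights (applied to the input and to the previous state, respectively), $b$ is the bias, and $\phi$ is the activation function.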
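To make the recurrence concrete, here is a minimal pure-Python sketch of a cell with several neurons. It assumes the common form h(t) = tanh(W x(t) + U h(t-1) + b), with `W` as input weights and `U` as state weights; the choice of tanh as activation, the layer sizes, and the weight values are all illustrative assumptions rather than the article's own implementation.

```python
import math


def rnn_step(x, h_prev, W, U, b):
    """Compute h(t) = tanh(W x(t) + U h(t-1) + b) for one time step."""
    h_new = []
    for i in range(len(b)):
        z = b[i]
        z += sum(W[i][j] * x[j] for j in range(len(x)))
        z += sum(U[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_new.append(math.tanh(z))
    return h_new


def rnn_forward(xs, h0, W, U, b):
    """Run the cell over a whole sequence and return the state at every step."""
    states = []
    h = h0
    for x in xs:
        h = rnn_step(x, h, W, U, b)
        states.append(h)
    return states


# Hypothetical sizes: 2 input features, 3 hidden neurons, hand-picked weights.
W = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]                  # input weights, 3 x 2
U = [[0.1, 0.0, 0.2], [0.0, 0.3, -0.1], [0.2, 0.1, 0.0]]    # state weights, 3 x 3
b = [0.0, 0.0, 0.0]

xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]                   # a 3-step input sequence
states = rnn_forward(xs, [0.0, 0.0, 0.0], W, U, b)
```

The same `W`, `U`, and `b` are reused at every step; only the state `h` changes, which is what carries information from one time step to the next.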

The simplest cells, as stated, are limited in their ability to remember inputs from distant steps. This limit becomes a problem when the network needs to keep track of distant events. In a sentence, for example, context is fundamental: not only is the presence of specific words relevant, but also how they relate to each other. Remembering only the immediately preceding word in a sequence therefore adds little value. In this case we need more complex memory cells, such as LSTM or GRU, which we will discuss in the next article.
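The fading memory of a simple cell can be illustrated with a toy experiment. The sketch below assumes a scalar tanh cell with a recurrent weight of 0.5 (a hypothetical value) and measures how much the final state moves when only the first input of the sequence is nudged; with these particular weights the effect shrinks by at least half at every step.

```python
import math


def final_state(xs, w=0.5, u=1.0):
    """Run a scalar tanh cell over xs and return the last state."""
    h = 0.0
    for x in xs:
        h = math.tanh(u * x + w * h)
    return h


def first_input_effect(length, delta=0.1):
    """How much the final state moves when only the FIRST input is nudged."""
    base = [0.3] * length
    nudged = [0.3 + delta] + base[1:]
    return abs(final_state(nudged) - final_state(base))


# Effect of the first input on the final state, for sequences of growing length.
effects = {n: first_input_effect(n) for n in (1, 5, 10, 20)}
```

Printing `effects` shows the influence of the first input dropping toward zero as the sequence grows, which is the kind of limitation that gated cells such as LSTM and GRU are designed to reduce.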

